On November 15, ByteDance's video generation models PixelDance and Seaweed officially launched on the Jimeng AI platform and are free for the public to use. Users can try both models through the web version or the mobile app.

PixelDance works best when a 10-second video contains 3 to 5 camera cuts, and it keeps scenes and characters consistent across those cuts. Seaweed excels at image-to-video generation: it closely preserves the first frame and produces smooth, natural, realistic footage.

Both models have been refined through continuous iteration in ByteDance products such as Jianying (CapCut) and Jimeng AI. They are aimed at professional creators and artists, for content work in design, film and television, and animation.

Vinci AI Review

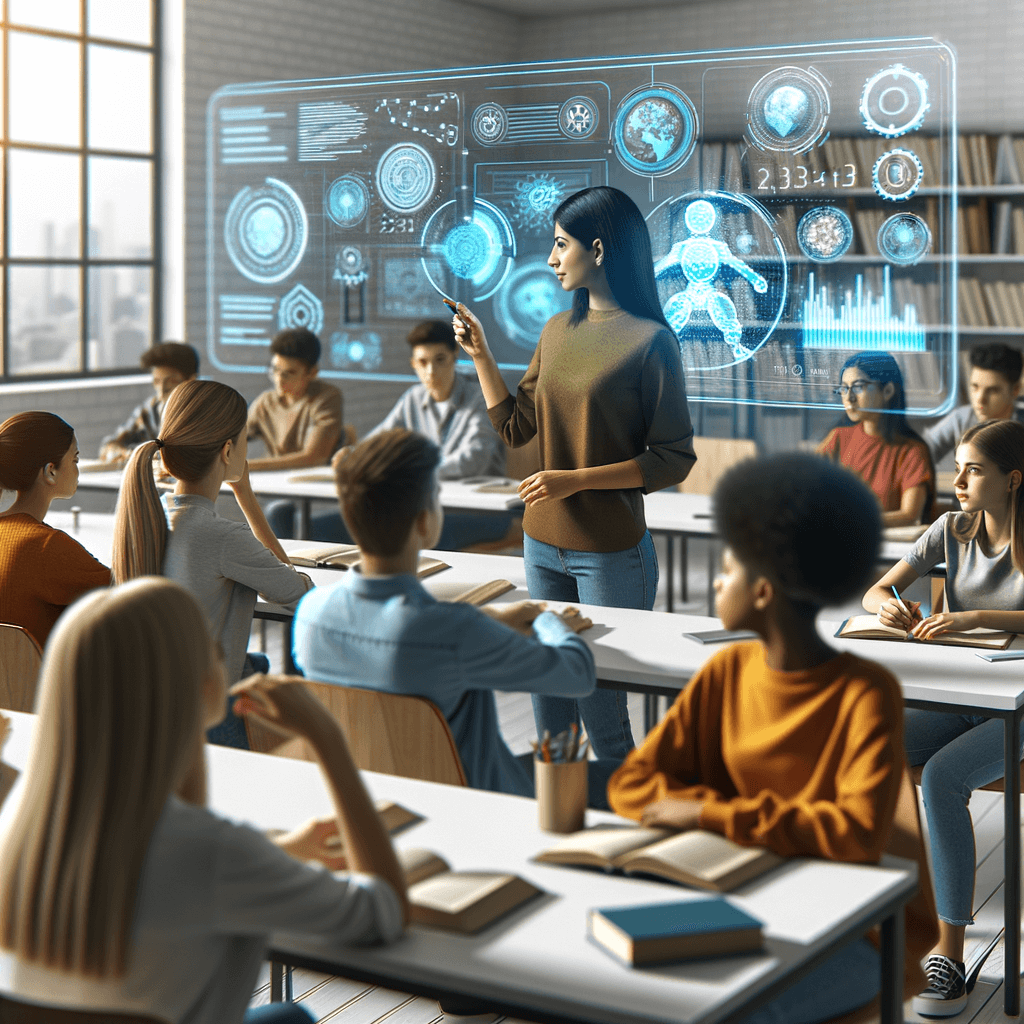

The two video generation models released by ByteDance represent the latest progress in AI video generation. PixelDance's handling of camera cuts and scene consistency, together with Seaweed's strength in image-to-video generation, reflect real technical advances. As AI generation technology continues to develop, it is likely to spread into more fields, such as education, entertainment, and advertising. Other companies are pursuing the same direction: Meta's Make-A-Video model, for example, can generate videos from text or images. How AI video generation evolves, and how it is combined with other AI technologies, will be a trend worth watching.
