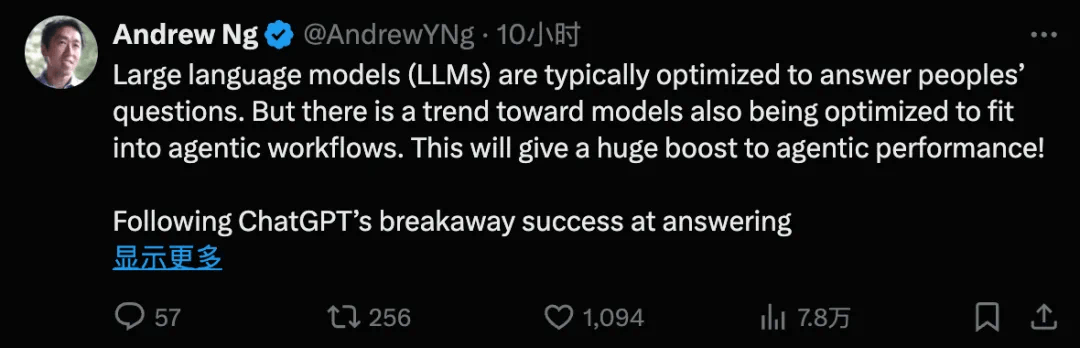

Andrew Ng, a leading authority in artificial intelligence, recently observed that large language models (LLMs) are shifting from simply answering questions toward being built into agentic workflows. He expects this shift to substantially improve agent performance.

Who is Andrew Ng? He co-founded Google Brain, led the deep learning project within Google X, and co-founded the online education platform Coursera. He is well known in machine learning, deep learning, and artificial intelligence, and his research and online courses have reached millions of people around the world.

In the past, LLMs were mainly trained to answer questions or follow human instructions in order to deliver a good consumer experience. Popular chatbots such as ChatGPT, Claude, and Gemini, for example, learn to understand and respond to what people want through large amounts of question-and-answer data and instruction fine-tuning.

Agentic workloads, however, call for different behavior. An agent needs to act like a goal-oriented individual that actively carries out tasks rather than passively waiting for instructions. This requires an LLM to reflect on its own output, use tools, write plans, and collaborate with other agents in multi-agent settings in order to complete tasks well.

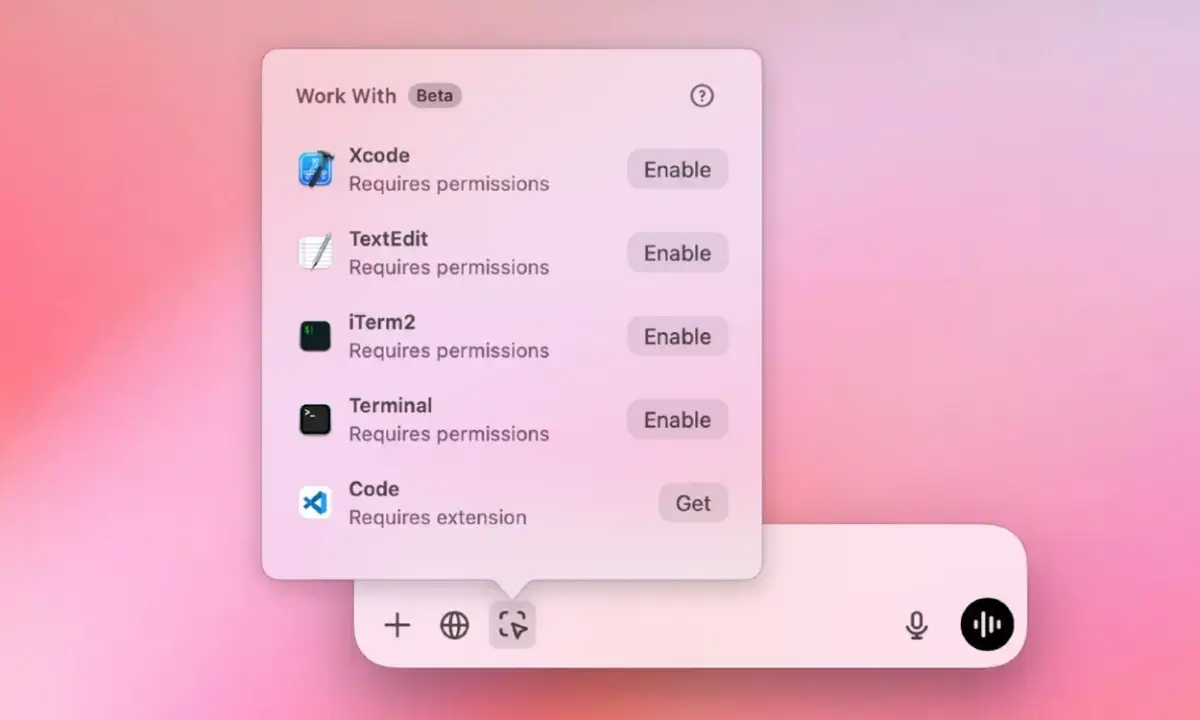

Major model developers are now increasingly optimizing LLMs for AI agents. Google's Bard and Microsoft's Bing Chat, for example, have begun integrating tool use and API calling, allowing LLMs to carry out more complex tasks. Anthropic's newly released Claude 3.5 Sonnet can even operate a computer much as a human does, a major step toward LLMs that use computers natively.

Ng notes that many developers currently prompt LLMs to produce the agentic behavior they want, while major LLM vendors are building capabilities such as tool use and computer use directly into their models.

Vinci AI comments:

Integrating LLMs into agentic workflows is an important trend in AI development, one that will let AI systems perform more complex tasks and play a larger role across many fields. How LLMs and intelligent agents evolve together from here, and how challenges such as AI safety are handled, will be worth watching.